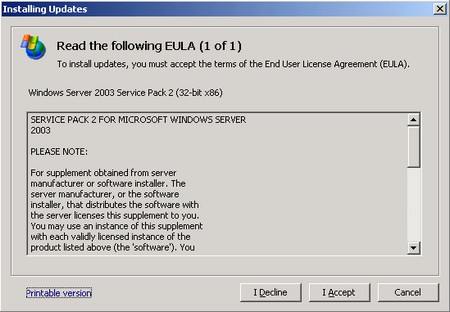

Susan Bradley’s blog tagline ought to be “Protecting you from your own stupidity” because she fought for the SP2 EULA from the day it was prematurely made available as a High Priority update on Microsoft Update. We all have a tendency to trust Microsoft, sometimes too much. Such is the case with updates, particularly on the systems we look after only part-time. Today this hard-fought, much-evangelized EULA is up on Microsoft Update.

Why? Well, a Service Pack of any sort is kind of a big deal. Despite Cousin Joel’s notion that it’s easier to consume, it still introduces large-scale changes to your operating system and the entire network environment. So what’s the big deal with a EULA? Well, it’s just another reminder, put up there to make sure you know you’re about to do something that you might want to think about first.

Why? Because you don’t want to be this guy:

The blog notes and instructions from the SBS blog have all been heeded. 32 hours of talking with tech support on the phone, changing the nic card, updating all the drivers, and still, the system is dogging to the point of being painful. Here is the unexplainable issue: I have 5 new machines, all came from Dell with XP Pro installed. They all had to be flattened and XP Pro re-installed. They worked great until SP2 was installed. Now they take 5 minutes to open an 8MB spreadsheet they used to open in 20 seconds. My 3 old machines were upgraded from W2K to XP Pro and they worked fine before SP2 was installed. Afterward, they are slower, but work better than the new machines. The old machines can still run ACT 6.0. The new machines fail with a program error after opening the database. Explain that!

All file access to the network is so slow now. Don’t suggest anything previously mentioned in the blog. 4 MS engineers can verify that all the tricks have been done as outlined in this blog.

There must have been some other deep layer of connectivity between the server and workstations that does some kind of handshaking/security checking that is slowing things down. Excel seems to have been hit the hardest. It is a terrible thing to see a 2GB 4Ghz Dual Core processor take 60 seconds to copy 10 files of 80K each from the network to the local drive.

I have 5 SBS 2003 servers and 3 of them are just fine. This one is dying and SP2 failed to install on the other one. I am afraid to work the issue as it might turn out like this one.

I really do need ideas and help short of flattening the server, as that may not help anyway. Ideas, anyone?

I feel bad for this guy, I really do.

However, a part of me wonders just how heavy the rock was. You know, the one that he was under since Microsoft started releasing service packs. As painful as the above is to read, and as painful as this process has been for him, this outlines the fundamental lack of respect for change management we have in the IT industry.

First, where is the full backup of the server that this was done on? At the very least, this would have allowed him to take the server back to the last known good configuration.

Second, where is the test system on which he checked ACT 6.0 for compatibility?

Third, never change more than one thing at a time. If you installed the Service Pack and it broke things, do not proceed to install drivers (that likely have not been tested with that service pack) or make more exotic changes.

Fourth, test, test, test, test. Forget about the stuff you should have done before you patched: too late to set up a test VM, too late to do a full backup, too late to check the app vendor for advisories related to the patch, too late. You’re patched; there is a whole new world on your network. Isn’t the first thing to do to check all the workstations, rerun MBSA, run performance tests, reset the performance counters on both server and workstations, build new baselines, etc.? If not, why?
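To make the baseline idea concrete, here is a minimal Python sketch, not a substitute for real performance counters. It assumes you record a handful of timings (in seconds) before patching and take the same measurements afterward. The function names, the measurement name, and the 1.5x tolerance are all made up for illustration.

```python
import shutil
import time
from pathlib import Path


def time_copy(files, dest_dir):
    """Return the seconds taken to copy every file in `files` into `dest_dir`."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    start = time.perf_counter()
    for f in files:
        shutil.copy(f, dest)
    return time.perf_counter() - start


def find_regressions(baseline, current, tolerance=1.5):
    """Return the names of measurements that got more than `tolerance` times slower.

    `baseline` and `current` map a measurement name to seconds.
    Measurements with no baseline entry are ignored, since there is
    nothing to compare them against.
    """
    return sorted(
        name
        for name, seconds in current.items()
        if name in baseline and seconds > baseline[name] * tolerance
    )


if __name__ == "__main__":
    # Timings taken from the comment quoted earlier: the spreadsheet
    # opened in 20 seconds before SP2 and takes 5 minutes afterward.
    before_sp2 = {"open_8mb_spreadsheet": 20.0}
    after_sp2 = {"open_8mb_spreadsheet": 300.0}
    print(find_regressions(before_sp2, after_sp2))
```

The point is less the code than the habit: if the "before" numbers were never written down, there is nothing to compare against when users start complaining.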

Preparing for a Service Pack

There are a few simple things you can do to minimize your chances of a 35-hour support call:

1. Check the main application vendor for the customer and make sure their products are tested and supported on the new service pack. Service Packs are available months in advance and vendors can test/advise whether you should update or not.

2. Reboot the server.

3. Back up the server, full backup or image.

4. Download the service pack.

5. Disconnect the server from the network.

6. Apply service pack.

7. If all is well, connect one workstation to the server to test the performance, application compatibility, access policies, etc.*

8. If all is well, reconnect everything to the network.

9. Rebuild your performance baselines.

10. Create a new full backup.

* – The last thing you want is to start storing live data on a server that you may need to restore to the previous version due to major problems. By taking the server offline you don’t have to deal with email that was delivered to the server and will now be lost, committed data, etc.

Now I realize that points #5 and #9 are in the same category as “Don’t eat pizza, it’s not good for you” and “Did you know that smoking causes cancer?” but those are really the two things that will limit your exposure and tell you that there are problems before it’s far too late.
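If you like your checklists enforced rather than remembered, the ten steps can be sketched as a gated sequence: each step has to be confirmed before the next one runs, and the first failure stops everything so you never pile a second change on top of a broken one. This is only an illustration in Python. `Step`, `build_steps`, `run_checklist` and the `confirm` callback are hypothetical names, and in practice the confirmation would be a human answering "did that work?".

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# The ten steps from the list above, in order.
SERVICE_PACK_STEPS = [
    "Confirm vendor support for the service pack",
    "Reboot the server",
    "Take a full backup or image",
    "Download the service pack",
    "Disconnect the server from the network",
    "Apply the service pack",
    "Test with one workstation",
    "Reconnect everything to the network",
    "Rebuild performance baselines",
    "Create a new full backup",
]


@dataclass
class Step:
    name: str
    check: Callable[[], bool]  # returns True when the step succeeded


def build_steps(confirm: Callable[[str], bool]) -> List[Step]:
    """Wrap each step name with a check that asks `confirm` about it."""
    return [
        Step(name, lambda name=name: confirm(name))
        for name in SERVICE_PACK_STEPS
    ]


def run_checklist(steps: List[Step]) -> Tuple[List[str], Optional[str]]:
    """Run steps in order and stop at the first failure.

    Returns the names of the completed steps and the name of the step
    that failed, or None if every step succeeded.
    """
    completed: List[str] = []
    for step in steps:
        if not step.check():
            return completed, step.name
        completed.append(step.name)
    return completed, None


if __name__ == "__main__":
    def ask(name: str) -> bool:
        return input(f"{name} - done and OK? [y/n] ").strip().lower() == "y"

    done, failed = run_checklist(build_steps(ask))
    if failed is not None:
        print(f"Stopped at: {failed}. Restore from the backup before changing anything else.")
```

Stopping at the first failure is the design choice that matters here: if "Apply the service pack" goes badly, the script never gets as far as reconnecting the network.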

Got your own change management tip? Blog it or post a comment.

For the less coherent, more grammatically correct realtime insight, follow me on Twitter at

